Authors

S. Perez, P. Arroba, J.M. Moya

Journal Paper

http://www.ccs.upm.es/wp-content/uploads/2022/03/1-s2.0-S0167739X21002934-main.pdf

Publisher URL

https://www.sciencedirect.com/science/article/pii/S0167739X21002934

Publication date

December 2021

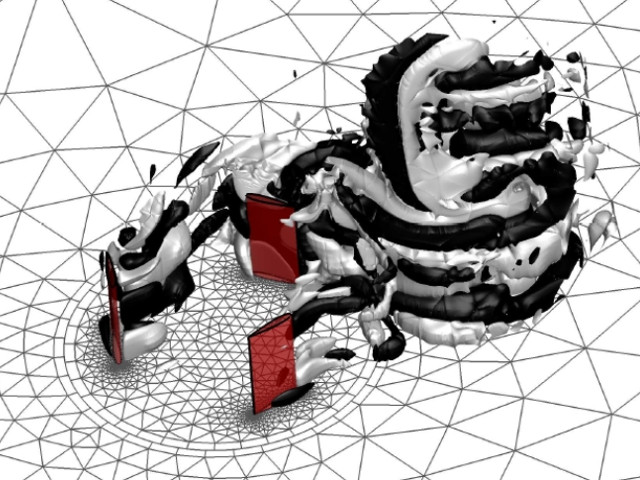

Until now, the reigning computing paradigm has been Cloud Computing, whose facilities are concentrated in large, remote locations. Novel data-intensive services with critical latency and bandwidth constraints, such as autonomous driving and remote health, will suffer under an increasingly saturated network. In contrast, Edge Computing brings computing facilities closer to end-users, offloading workloads to Edge Data Centers (EDCs). Nevertheless, Edge Computing raises other concerns, such as EDC size, energy consumption, price, and user-centered design. This research addresses these challenges by optimizing Edge Computing scenarios in two ways: two-phase immersion cooling systems and smart resource allocation via Deep Reinforcement Learning (DRL). To this end, several Edge Computing scenarios have been modeled, simulated, and optimized with energy-aware strategies using real traces of user demand and hardware behavior. These scenarios include air-cooled and two-phase immersion-cooled EDCs, devised using hardware prototypes, and a resource allocation manager based on an Advantage Actor–Critic (A2C) agent. Our immersion-cooled EDC's IT energy model achieved a normalized root-mean-square deviation (NRMSD) of 3.15% and an R² of 97.97%. These EDCs yielded an average energy saving of 22.8% compared to air-cooled EDCs. Our DRL-based allocation manager further reduced energy consumption by up to 23.8% in comparison to the baseline.
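The energy model's accuracy is reported with two goodness-of-fit metrics, NRMSD and R². As a rough illustration of how such figures are typically computed from measured versus predicted power samples, here is a minimal Python sketch. It assumes NRMSD is normalized by the range (max − min) of the observed values; the abstract does not state which normalization the authors used (normalizing by the mean is also common), and the sample values below are purely hypothetical, not data from the paper.

```python
import math
from typing import Sequence


def nrmsd(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Root-mean-square deviation normalized by the range of the observed values.

    Assumption: range normalization (max - min). The paper's exact
    normalization is not given in the abstract.
    """
    if len(observed) != len(predicted) or not observed:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(observed)
    # Root of the mean squared error between measurements and model output.
    rmsd = math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n)
    spread = max(observed) - min(observed)
    if spread == 0:
        raise ValueError("observed values have zero range")
    return rmsd / spread


def r_squared(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_o = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))  # residual sum of squares
    ss_tot = sum((o - mean_o) ** 2 for o in observed)  # total sum of squares
    if ss_tot == 0:
        raise ValueError("observed values have zero variance")
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Hypothetical measured vs. modeled IT power samples (watts), for illustration only.
    measured = [100.0, 120.0, 140.0, 160.0]
    modeled = [102.0, 118.0, 141.0, 159.0]
    print(f"NRMSD = {100 * nrmsd(measured, modeled):.2f}%")
    print(f"R^2   = {100 * r_squared(measured, modeled):.2f}%")
```

Expressing both metrics as percentages mirrors how the abstract reports them (3.15% and 97.97%): a lower NRMSD and an R² closer to 100% both indicate a closer fit between the model and the measured traces.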